Across our diverse explorations at the VIP Studio, one common thread is storytelling.

Below are blog entries from our collaborators, describing how their stories were brought to life through their work at the studio.

Dr Nina Willment: Evaluating the use of Virtual and Immersive Production (VIP) for climate storytelling and engaging audiences with questions of sustainability

Investigating the challenges and opportunities of using virtual and immersive production technologies as a medium for telling sustainability and climate change related stories. Understanding the practice of ‘creative making’ as an artistic and immersive research methodology.

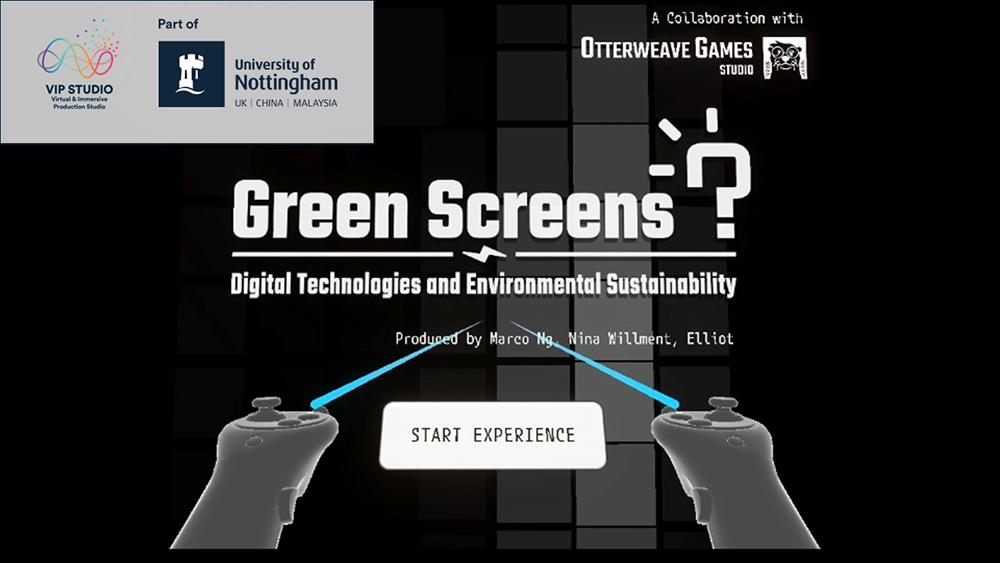

Figure 1: Image of ‘Green Screens? Digital Technologies and Environmental Sustainability’ immersive experience. Copyright: Richard Ramchurn/Virtual and Immersive Production Studio.

I am a cultural and economic geographer interested in the intersections between digital technologies, audiences and climate action. I therefore wanted to use this six-month VIP Residency to investigate the impact of VIP technology as a medium for telling sustainability and climate change related stories. The aim of the residency was to explore audience interaction with VIP hardware and climate stories, connections to other everyday digital devices and the sustainability challenges (and circular economy potentials) associated with immersive and digital technologies.

Drawing together a virtual reality experience, holograms and discarded TV sets with floppy discs, six months of working in (and with!) the VIP Studio, learning, playing, testing and R&D culminated in the creation of a twenty-five-minute immersive experience entitled ‘Green Screens? Digital Technologies and Environmental Sustainability’.

Green Screens? confronts public audiences with the globalised social and environmental impacts of our insatiable appetite for digital technologies. To begin, virtual reality transports audiences inside the VR headset they are physically wearing, breaking down this digital technology into its component parts. Each of these component parts represents one example of the devastating environmental and social impacts of digital technology supply chains, from cobalt mining for digital technology batteries, to the e-waste dumps of discarded technology.

From the VR, audiences are transported to the nostalgic setting of a teenage bedroom desk, littered with analogue reminders of a hopeful, environmentally sustainable future. Through the medium of hologram, audiences are subsequently introduced to the circular economy as a useful methodology for how we might shift away from our voracious digital consumerism. Moments of individual reflection are encouraged during the experience, with audiences having opportunities to write individualised climate pledges on floppy discs and to post these commitments through an old TV set.

Figure 2: Opening scene of the ‘Green Screens? Digital Technologies and Environmental Sustainability’ VR experience. Credit: Marco Ng/Otterweave Games Studio.

I wanted to undertake this VIP Residency project and create the Green Screens? experience because, although immersive technologies offer huge potential for climate education, they can have their own significant carbon footprint and contribute to e-waste (Woodhead, 2023).

To date, there has been very little critical reflection on the sustainability challenges and potentials of the virtual and immersive production (VIP) industry, especially reflections which use VIP technologies themselves to tell these critical sustainability stories.

Green Screens? also asks questions of ‘creative making’ as a methodological process for how we can transform environmental sustainability and climate research into climate-informed immersive experiences, which inspire public audiences into personalised climate action. Through the practice of ‘creative making’, the development of the experience aims to create a critical response to the increased situating of digital and immersive technologies as inherently ‘green’ alternatives, instead demonstrating to public audiences the widespread environmental issues associated with the manufacture, use and disposal of digital technologies.

This project simply could not have existed without the technical and knowledge capabilities of the VIP Studio. Throughout the project, the VIP Studio gave me the space and time to be able to try out different immersive technologies to be featured within the experience, from motion capture to hologram design. The VIP team were invaluable in helping me translate my storytelling to technical realities, helping me to incorporate elements such as 3D scanning to bring the storytelling to life.

‘The VIP Studio provided me with the opportunity to develop my skillset and knowledge of how to innovate and work with immersive technologies. Through the support of the studio, working alongside amazing academics and artists in a collaborative, nurturing and fun environment, I was able to develop my creative practice and research trajectory in novel and career-defining ways. None of this would have been possible without the support of the VIP Studio’.

Green Screens? has subsequently been showcased at Trinity College Dublin, Nottingham’s Theatre Royal and at Green Hustle Festival in Nottingham. The experience has also been submitted to Aesthetica Film Festival in York, and for the Lumen Prize (a prize celebrating the innovative intersections between art and technology).

With huge thanks to the VIP Team (Professor Helen Kennedy, Dr Sarah Martindale, Dr Paul Tennent, Dr Richard Ramchurn, Tamsin Beeby, Siobhan Urquhart, Matt Davies), the other VIP Residents (Dr Lidoly Chávez Guerra, Marina Wainer, Dr Jen Bell, the SHAPESHIFTER team and Joe Strickland), and my wonderful collaborative team for Green Screens? (Dr Alexandra Dales, Jack Chamberlain from Jack Chamberlain Creative, Elliot Mann and Marco Ng from Otterweave Game Studio).

SHAPESHIFTER: Exploring the intersections of gender, identity, and nature through a mixed reality experience combining storytelling and free play

Investigating how mixed reality can explore themes of gender and identity to provoke thought and enhance understanding of the natural world's diversity, while also scaling the experience to accommodate multiple audience members in an immersive environment that combines free play and storytelling.

Image credit: Freyja Sewell

SHAPESHIFTER is a location-based experience where a live actor and audience share the same physical and virtual space. Leveraging live performance capture, the actor performs as a 3D animated character within a mapped virtual environment, guiding a small audience of ‘live players’ equipped with VR headsets through an immersive adventure exploring notions of gender and identity in the natural world.

SHAPESHIFTER is a collaboration of multidisciplinary artists from the worlds of theatre, opera, digital art, biophilic design and immersive media:

- Maggie Bain: Co-Creative Director and Actor

- Ruth Mariner: Co-Creative Director and Narrative Design

- David Gochfeld: Technical Director

- Freyja Sewell: Art Director

- Lucy Wheeler: Unreal Developer

- Toki Alison: Producer

In developing this piece, the team is exploring how motion capture, 3D avatars, and game engine environments could provide new perspectives on the natural world while addressing societal questions about gender representation and identity. The goal is to do this in a way that is both playful and emotionally truthful, ensuring that the technology enhances the storytelling rather than detracting from it.

Our previous work on the project has focused on the performer, the character, and the environment. This included explorations into motion capture techniques for emotional expressivity with non-human characters, and into integrating biophilic forms and details from the natural world into the virtual environment design. But the heart of the experience will be the co-presence of the live performer and audience in shared physical and virtual space, and this is what we wanted to explore in our residency at the VIP Studio.

For this residency, the primary focus was on the technical challenge of aligning the virtual and physical spaces accurately. With multiple audience members wearing VR headsets and moving within the same shared space, we needed to ensure their positions in the virtual world accurately corresponded to their locations in the physical space, thus avoiding collisions and enhancing the perception of co-presence. Using the motion capture system would allow us to track all of the audience members, so they could see each other represented as avatars in the virtual world.

Beyond this key technical challenge, we wanted to use the residency to understand more about how the different layers of reality and representation function in the experience, and how we can use those to create an engaging and immersive experience that helps tell the story.

Image credit: Joe Bell/Virtual and Immersive Production Studio

The collaboration with the VIP Studio has been pivotal to the project's development. The studio's technology – particularly the Vicon mocap system, facial performance capture helmets, powerful graphics computers, and Quest VR headsets – allowed us to test all the elements of the project. But even more importantly, the Studio team’s experience and understanding of digital and immersive performance gave us the confidence to experiment and try new ideas.

Dr Richard Ramchurn’s tireless support and ‘can do’ inventiveness were invaluable in helping us tame the multiple layers of recalcitrant technology and overcome the key technical hurdles – enabling us to find a solution to align the physical and virtual spaces and test the co-present experience for both the actor and audience.

With this working, we could begin looking at the affordances of mixed-reality and how the physical and virtual worlds interact with each other. We were able to test different kinds of interactions within the virtual environment, and see how they contributed to the experience, and how our test audience responded to them. We also experimented with tracked physical props corresponding to virtual objects, which unlocked a surprising range of playful interactive possibilities.

Dr Sarah Martindale and Dr Lidoly Chávez Guerra were wonderfully game at helping us test the experience and gave us invaluable feedback. And the most rewarding part came at the end of the last day of our residency, when Dr Jen Bell brought her family into the studio. It was delightful watching her children explore and play within the environment we’d created – discovering the extra-dimensional pods and interactable triggers hidden in the environment, playing catch with the tracked prop (a pillow), and reacting to the sudden appearance of the main character, Pùca.

These testing sessions allowed us to begin to see how effectively mixed reality performances could affect and engage audiences. This exploration was crucial in validating mixed reality's potential as a medium for powerful storytelling and audience involvement.

SHAPESHIFTER aims to explore the concept of 'Biological Exuberance'—the idea that nature is inherently diverse and magnificent, often more so than we typically acknowledge. The project seeks to challenge conventional perceptions of gender as binary by using nature's diversity as a metaphor. Through this mixed-reality experience, audiences are encouraged to reflect on their own notions of gender and identity and reassess what they consider 'natural.'

During the residency, the team also developed the script, experimented with biophilic design principles for virtual environments, and explored dramaturgical opportunities for audience interaction. This comprehensive approach aimed to build an emotional connection with the character and the story, thereby enhancing the overall immersive experience.

SHAPESHIFTER exemplifies how mixed reality can be used to challenge societal norms and inspire audiences to see nature, and themselves, in new ways. With the support of the VIP Studio, the project has made significant advancements, reaffirming the potential of extended reality technologies in creating engaging and impactful theatrical experiences.

Our 3D avatar Pùca—a shapeshifting, nonbinary nature spirit from Celtic folklore—serves as a bridge between humanity and nature, encouraging audiences to embrace the magnificence of diversity. As the project evolves, it promises to pave the way for other artists to adopt XR technologies in theatrical performances, fostering inclusion and environmental consciousness.

The next stages of SHAPESHIFTER will focus on perfecting the narrative structure and leveraging the insights gained from the residency to secure funding and support for further iteration and touring. As we move forward, we are excited to continue refining these elements and incorporating additional science-based details and playful interactions to support and elevate the narrative.

With immense gratitude to the VIP Team at the University of Nottingham (Professor Helen Kennedy, Dr Sarah Martindale, Dr Paul Tennent, Dr Richard Ramchurn, Tamsin Beeby, Siobhan Urquhart, Matt Davies), the other VIP Residents (Dr Lidoly Chávez Guerra, Marina Wainer, Dr Jen Bell, Dr Nina Willment and Joe Strickland), and the dedicated SHAPESHIFTER team.

FINELINE residency at the VIP Studio

Lumo Company: Contemporary Circus Performance in VR

Welcoming our first international artists to the VIP Studio for an intensive week of creative innovation followed by post-production, resulting in a ground-breaking demo of digital circus performance.

Image copyright: Mikki Kunttu

FINELINE is an incredible live show by Finnish contemporary circus performers Lumo Company. It combines amazing tight-wire and aerial work with a fabulous original score performed by the musician live on stage, with a spine-tingling finale. The show toured venues in Europe during 2023 thanks to a grant from the Nordic Culture Fund. Alongside the live dates, the project explored ways to extend the reach of the show using digital technologies like online streaming and NFTs. This included a three-day R&D residency at the Virtual & Immersive Production Studio (based at the University of Nottingham) to capture parts of the show for presentation in Virtual Reality. This visit formed part of Sarah Martindale’s Nottingham Research Fellowship, as explained in this video documenting the residency.

The Lumo team of two circus performers, the musician, sound designer and producer arrived in Nottingham with their specialist tight-wire rig. Once installed in the VIP Studio, it took up most of the space. The plan was to experiment with the various immersive production technologies available. The first priority was to try out volumetric video capture. By positioning 10 specialist depth cameras around the equipment it was possible to capture the performance from multiple angles. The footage was then combined using Depthkit software to create a three-dimensional recording that could be positioned and manipulated in virtual space. Using the studio’s state-of-the-art Varjo augmented reality headset we were able to play with turning the performers into giants filling the space and miniaturising them to fit in the palm of your hand.

Other forms of performance capture were also utilised during the residency. 360-degree camera footage puts the viewer in the centre of the action, with a completely different perspective on the incredible skills displayed. Motion capture of live acrobatics allows performers to be overlaid with computer-generated avatars. Spatialised audio means different sounds can be triggered by movement, for example hearing an internal monologue when you’re close to a performer. All these techniques have subsequently been combined to produce a VR demo, thanks to a prototyping grant from the XR Network+. This experience showcases the range of immersive formats in which circus performance can be presented. Users can navigate between excerpts from the show, each staged in its own generative AI environment. Our collaboration continues as we work towards producing a digital version of FINELINE that could tour to arts venues and festivals.